Test strategy, what a funny concept. This strategy isn’t going to help you win any battles (which is where the word strategy comes from, after all), but for lack of a better, well-understood term, this blog post is a reflection on what I imagine will work for my team*.

*disclaimer: what might work for my team might not work for yours. People are amazingly diverse and your team and company context is fundamentally different. Also this here is a wish list of what I think will work. It’s subject to change as we learn and evolve.

Context

First let’s set the scene. Our scrum team includes 1 Android developer, 1 iOS developer, 2 back end developers, 2 business analysts (1 is our scrum master), 2 testers and a team/tech lead. We are changing our team structure and I’ve come on board as a software engineer in test. Our team closely collaborates with the design team; they are included in our group email threads but don’t come to our retros. We have a 10 day sprint cycle that looks a little like this:

We have a daily standup, a few kick off meetings at the start of the sprint to lock in what we are working on for the next 2 weeks, some mid sprint review/next sprint refinement sessions and a few meetings at the end that help tie up what we’ve completed. Consider this a crash course in Scrum Agile if you will. Not everyone is required to attend all of these meetings and I won’t be covering these meetings in detail in this blog post.

Get to the Test Strategy

Yes I know, that was a rambling tangent, but the context is important. Before I get into the good bits I’ll ask you a question:

Why do we even bother with testing?

Some people say, “to ensure the product works as expected” or, “to find bugs”, “it’s my job to test things” and these are all ok answers but they miss the point a little. Here’s my answer:

We test to get feedback on the state of the product. To help us answer the question, “are there any known risks with shipping this product to production?”

Paraphrased from conversations with Michael Bolton, the tester not the singer

Every part of the following strategy is all tied into facilitating feedback. The more timely and accurate the feedback the better.

Testing and quality are a team responsibility; it’s not just up to one person to be the quality gate keeper. My role is to help facilitate feedback.

Layer One: The product design feedback loop

This is all a little out of scope of my team’s day-to-day activities, but this is how our design team tests whether we are building the right thing that users need.

User/Product Research

This might involve researching our market for current trends. How many of our customers care about their superannuation? What is their financial literacy? What types of problems are they facing? What are our competitors doing and how does their experience deliver value?

Wireframe/Design prototyping

Eventually someone will need to start sketching out some design ideas. What’s the user flow through a particular feature?

User Testing

This won’t happen for every new design, for example log in hasn’t gone through this process. Our new big features will go through this type of testing. This helps get feedback on the design and layout. Does it all make sense?

Design and user story creation

Out of all of this work, eventually the design team and the business analyst will work together to create acceptance criteria, refine the UI and get the rest of the team up to speed with the context of a feature. Our user stories and designs are usually shared on a Confluence page and linked to Jira tasks. We use a GIVEN WHEN THEN structure for our user stories.

Layer Two: The code feedback loop

Exploratory Testing

All testing is exploratory in nature; it’s front and centre and runs across everything we do. Chaos engineering is a type of exploratory testing, and so is building the code locally. We use our skills, plans and judgement to determine when and how much testing is needed at any point.

Experience Testing

When we do the code review, we will do exploratory testing based on the risk of the feature, time boxed to a session or two depending on what has been built. We will look at the user stories, brainstorm more edge cases and consider whether they are worth testing. We check whether the experience of the feature makes sense and whether there are any ways people can get into sticky, unexpected situations.

Unit Tests

As a developer builds a feature, they will create unit tests based on the user acceptance criteria. Developers will use the tools they are most comfortable with to write these tests. If you’d like to read more, Martin Fowler has this blog post on Unit Testing.
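
As a rough sketch of how an acceptance criterion written as GIVEN WHEN THEN might map onto a unit test, here is an example using Python’s built-in unittest. The language is only for illustration (our developers use whatever suits their platform), and the `LoginForm` class, its error messages and the scenario are all hypothetical.

```python
import unittest


class LoginForm:
    """Hypothetical feature under test: validates a login form before submit."""

    def __init__(self, email: str, password: str):
        self.email = email
        self.password = password

    def errors(self) -> list[str]:
        problems = []
        if "@" not in self.email:
            problems.append("Enter a valid email address")
        if not self.password:
            problems.append("Enter your password")
        return problems


class LoginFormTest(unittest.TestCase):
    def test_missing_password_shows_error(self):
        # GIVEN a user who has typed a valid email but no password
        form = LoginForm(email="sam@example.com", password="")
        # WHEN the form is validated
        errors = form.errors()
        # THEN the user is told to enter a password
        self.assertEqual(errors, ["Enter your password"])
```

Saved to a file, this can be run with `python -m unittest`. Keeping the three comments in the test makes it easy to trace a failing test back to the user story it came from.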

UI Automation

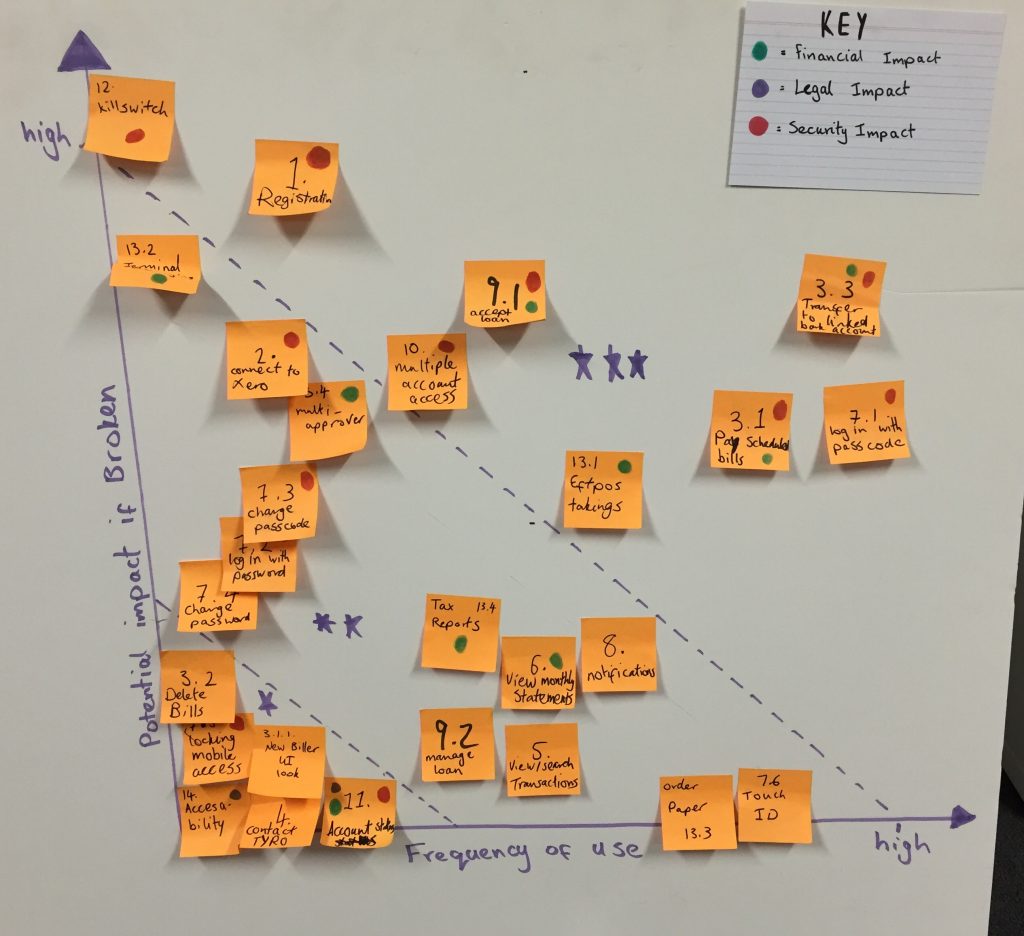

I have my visual risk board next to our team which we use to prioritise how much testing we build at this layer. We use Espresso for Android and XCUITest for the iOS app.

“Why not Appium?”, I hear you ask

Simple: when test code lives in a repository outside of your production code, you decrease collaboration with the whole team. Also, you can’t easily run your Appium tests during pre-commit testing or locally as a developer. You can follow the interactive visual risk for UI automation exercise here to understand more.

Code Review

When a new API is being developed, I’ll often pair with the developer to do a code review. We will talk about the architecture, brainstorm testing ideas, do a bit of testing (usually through Postman if we are testing an API) and chat about test coverage. Is it adequate? Is there anything missing? Can we see the tests fail under the expected conditions?

If it’s a front end feature, I’ll check out the code locally and use a different emulator/simulator than what the developer uses. I’ll give the feature a good shake out and check the test coverage. I’ll also test for accessibility if it’s a new front end feature.

Mock Testing

For our mobile app, we are able to do most of our code review testing without ever talking to a backend. The engineers have built some mock servers into our apps: when the app would call an API, our mock server returns a canned response instead. This helps us test that the UI and the flow hang together even when test environments aren’t available. If you’d like to read more, check out this article on mock testing for Android or this one for iOS.
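
To make the canned response idea concrete, here is a minimal sketch in Python. It is not how our apps implement it (those mocks live in the Android and iOS code); the `MockServer` class, the `/account/balance` route and the `balance_label` function are all made up for illustration.

```python
import json


class MockServer:
    """Hypothetical in-app mock: maps (method, path) to a canned JSON response."""

    def __init__(self):
        self._canned = {}

    def stub(self, method: str, path: str, status: int, body: dict) -> None:
        self._canned[(method.upper(), path)] = (status, json.dumps(body))

    def handle(self, method: str, path: str) -> tuple[int, str]:
        # Unstubbed routes return 404 so a missing stub is obvious during testing
        missing = (404, json.dumps({"error": "no stub"}))
        return self._canned.get((method.upper(), path), missing)


def balance_label(server: MockServer) -> str:
    """Stand-in for UI code that renders whatever the API returns."""
    status, raw = server.handle("GET", "/account/balance")
    if status != 200:
        return "Balance unavailable"
    return f"Balance: ${json.loads(raw)['balance']:,.2f}"


server = MockServer()
server.stub("GET", "/account/balance", 200, {"balance": 1234.5})
print(balance_label(server))
```

Because the response is canned, the same stub can be swapped for an error status to check how the screen behaves when the backend misbehaves, without waiting for a test environment.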

Build Pipelines

We have different pipelines for different applications. We are using TeamCity as our Continuous Integration tool. Generally all of our unit tests and UI tests will be run, and maybe our contract tests. I have a few other ideas to increase the value from our build pipelines that I’ll talk about in Chaos Testing. If our main builds start failing, we won’t release the software.

Device Coverage

We don’t necessarily focus on doing device testing for each feature that comes through. I try to pick a different emulator/simulator than what the developers use. I always make sure features get tested on a Samsung. For some features, if they are 3 star features from our risk analysis, we will spend more time testing on a wide variety of devices. We currently have an on-premise mobile device cloud server delivered by Mobile Labs. If you don’t have a device cloud, you could set up your own device farm.

Why Samsung?

Samsung has a wide market saturation and they always do funky stuff to the Android UI. The Android emulators are awesome at vanilla Android. However, most people out there aren’t using vanilla Android :(.

Contract Testing

We are moving towards having contract testing in place that lets us know if an API starts to break. If someone changes the JSON payload in an API, our contract will break and someone will know they have more stuff to clean up. We don’t have contract testing for our mobile app yet but some of our downstream microservices are starting to build these. If you’d like to find out more, read this article by Martin Fowler.
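
As a very small illustration of the idea (real contract testing tools such as Pact do far more than this), the sketch below checks a parsed JSON payload against the fields a consumer relies on. The `ACCOUNT_CONTRACT` fields and the sample payloads are assumptions, not our actual API.

```python
def contract_violations(payload: dict, contract: dict) -> list[str]:
    """Compare a parsed JSON payload with the fields a consumer relies on."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


# Hypothetical contract: the fields our app reads from an account endpoint
ACCOUNT_CONTRACT = {"id": str, "balance": float, "currency": str}

# Someone changed balance to a string and dropped currency
changed_payload = {"id": "a1", "balance": "10.50"}
for problem in contract_violations(changed_payload, ACCOUNT_CONTRACT):
    print(problem)
```

Extra fields in the payload are deliberately ignored here: the consumer only cares about what it reads, so a provider can add fields without breaking the contract.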

Test Environment

We have an integration test environment that our code is constantly being deployed into. Sometimes this means an API is down because it’s being deployed. We do a lot of our API testing in this environment.

Chaos/Crash Testing

With Android there’s a command line tool called monkey (the UI/Application Exerciser Monkey, not to be confused with Netflix’s Chaos Monkey). This tool is a UI exerciser: it throws random user input at your UI to try and find where it crashes. I’m hoping to include this in a build pipeline for an overnight build. Run it for a few hours on an Android device and see if it crashes. The next night, do the same thing but on a different device/OS combination. This will give us reasonable device testing over a sprint. I don’t know of a similar tool for iOS. You can read more about chaos engineering on Wikipedia.
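
The UI exerciser ships with the Android SDK and is invoked as `adb shell monkey`. Here is a sketch of how a nightly job might build that command and rotate through devices. The device serials, package name, event count and throttle value are placeholders, and this only builds the command rather than running it.

```python
def monkey_command(serial: str, package: str, events: int, seed: int,
                   throttle_ms: int = 200) -> list[str]:
    """Build an adb command that sends pseudo-random UI events to one app."""
    return [
        "adb", "-s", serial,             # target one specific device or emulator
        "shell", "monkey",
        "-p", package,                   # restrict events to our app's package
        "-s", str(seed),                 # fixed seed so a crashing run can be replayed
        "--throttle", str(throttle_ms),  # delay between events, in milliseconds
        "-v",                            # verbose output
        str(events),                     # number of events to send
    ]


def device_for_night(devices: list[str], night: int) -> str:
    """Rotate through the device list, one device per nightly build."""
    return devices[night % len(devices)]


# Hypothetical device serials and package name
devices = ["emulator-5554", "samsung-serial-1", "pixel-serial-2"]
serial = device_for_night(devices, night=4)
print(" ".join(monkey_command(serial, "com.example.app", events=50000, seed=4)))
```

Recording the seed alongside the build matters: if a run crashes, the same seed on the same device should replay the same sequence of events.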

Layer Three: Shipping the product feedback loop

Bug bash

A few days before the end of the sprint, our team and invited guests will sit down and do some exploratory testing on the features that have just been built. If anyone wants to explore a new API that’s been built, they can. If they’ve had their head in unit tests lately, they have the chance to explore some of the new UI. You can read how to run a bug bash to find out more. If major bugs are found here we won’t release the software. We might do a mob programming session when we don’t have enough features for a bug bash.

Demo

On the last day of the sprint we will demo our features to a broader audience. Feedback is gathered and turned into Jira items/research for the design team.

Internal release

Then we release to internal staff members. Many other companies call this “eating your own dog food”. This gives people the chance to raise more feedback before we put the product in front of customers. You can read more on Wikipedia here.

Beta Release

We can release our app to our high value or digitally savvy customers who want to be ahead of the curve. This is a customer engagement strategy as well as a test strategy.

Percentage roll outs

The Google Play Store allows you to do percentage rollouts. Say you roll out the new version to 5% of users and monitor production for any new crashes or customer complaints. If it’s all smooth for a few days you can continue the rollout to 50% and then 100%. The Google Play Store lets you halt the rollout if major bugs do occur. The Apple App Store has a similar feature (phased release).
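
The decision at each stage can be written down as a simple rule. Here is a sketch, assuming a crash-free rate is the signal being monitored. The 99% threshold is an invented number, and in practice the call would also weigh customer complaints and human judgement.

```python
ROLLOUT_STAGES = [5, 50, 100]  # the percentages used in the example above


def next_rollout_action(current_percent: int, crash_free_rate: float,
                        threshold: float = 0.99) -> str:
    """Suggest what to do next with a staged rollout."""
    if crash_free_rate < threshold:
        return "halt"
    later_stages = [stage for stage in ROLLOUT_STAGES if stage > current_percent]
    if not later_stages:
        return "complete"
    return f"increase to {later_stages[0]}%"


print(next_rollout_action(5, crash_free_rate=0.995))
print(next_rollout_action(50, crash_free_rate=0.98))
```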

Monitoring in production

What metrics should be communicated back to the team? How can we respond to issues in production? I like this quote from a 5 minute Google talk back in 2007:

Sufficiently Advanced Monitoring is Indistinguishable from Testing

Ed Keyes

Layer Four: Supporting the product feedback loop

Supporting devices

We should support all of the devices that 80% of our market uses. We will support Android 6 (Marshmallow) and up, and iOS 11 and up. There probably will be some obscure Android devices out there that don’t play nice with our app. Android is a beast like that.
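
One way to turn the 80% target into a device list is to sort devices by usage share and keep adding them until the target is covered. The sketch below does that with invented usage numbers; real figures would come from your own analytics.

```python
def devices_to_support(usage_percent: dict[str, int], target: int = 80) -> list[str]:
    """Pick the most-used devices until their combined share reaches the target."""
    chosen, covered = [], 0
    ranked = sorted(usage_percent.items(), key=lambda item: item[1], reverse=True)
    for device, share in ranked:
        if covered >= target:
            break
        chosen.append(device)
        covered += share
    return chosen


# Invented usage shares, in whole percentages
usage = {"Device A": 40, "Device B": 30, "Device C": 15, "Device D": 10, "Device E": 5}
print(devices_to_support(usage))
```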

Facilitating customer feedback

There will be an easy way for customers to provide feedback in app. I have some ideas on how to make that experience better but there are privacy concerns to consider. We will also be monitoring our Google Play Store and Apple App Store reviews for bugs.

Triaging feedback

Someone should be monitoring all of this feedback, attempting to reproduce bugs if customers are facing them and raising them in the team’s backlog for prioritisation next sprint.

Soap Opera Testing

Maybe in the future, we could try some soap opera testing with the business? Soap opera testing is a condensed and over dramatised approach to testing. What are the wackiest scenarios our customers have actually tried? How does our system break? You can read more about this exercise here.

Why the layers?

Consider each of these layers like a net. It won’t catch everything, bugs in production will still happen. But when we have all of these feedback loops layered on top of each other, we get a pretty tight net, where hopefully no major issues get into production.

What about auditing or compliance?

Our source of truth is the code, Jira and Confluence. When we have all of it integrated, we can prove we tested a feature thoroughly without too much extra overhead. An auditor’s mindset and a tester’s mindset are very similar. Testers are concerned with product risks, auditors are concerned with business risk.

Their main question is, “did you do what you say you do? Did you follow your process?” and, “Is the existing process adequate?”.

Where are your test cases?

Michael Bolton has a 7 part series on breaking the test case addiction. You can read part one here. You don’t need test cases to prove you did adequate testing. They create unnecessary overhead that detracts from adding business value.

What else is missing?

Security testing is not included in this test strategy. Neither is performance testing. Getting these included can be challenging. I’m open to your suggestions on how I can incorporate this type of feedback in a timely manner.

What else would you add to your test strategy?

Really helpful article. This will help a lot to our mobile app developers. Keep it up.

You shared a wonderful article and it is very useful to the app development companies and Thanks for sharing

As a mobile dev, this is very helpful to me. Thanks Sam for sharing it!

Nice article , very informative .

This is an interesting article. Very knowledgeable thank your sharing.

Awesome blog. Loved it!

Really nice article. May I ask if you have any test strategy document with test automation estimation?

No, but I had this presentation on test strategy gaps and a timeline of when we wanted to fix some of the gaps, which involved enhancing our CI/CD pipelines for better test coverage:

https://bughuntersam.com/mobile-app-test-strategy-gaps/

When working in an agile way, we only estimate small tasks that can fit in a 2 week sprint cycle. If we were working on enhancing our automation we’d add a small story to our board and work on it in sprint. If a story was 8 points it would take the full 2 weeks to do. We tried to break these types of stories into smaller chunks.

Today, mobile applications have become the primary business enablers for all industries across domains. Most businesses invest heavily in mobile apps to enable high-performing mobile apps for ensuring a great User Experience (UX).

To read more about mobile testing please follow: https://www.testingxperts.com/blog/mobile-app-testing-guide

Thanks for the link, however I disagree with the benefits of mobile app testing for businesses. Testing alone does not enhance the UI/UX, it doesn’t improve customer satisfaction or deliver functional software.

The act of software testing helps us get information about the product. A business could choose to not action any feedback raised from testing.