If you have seen my tales of fail blog or my career growth over time, you may have noticed I’ve started a new tech role on average once a year since I started in tech over 10 years ago. There’s been a series of 6-month contracts, redundancies and company misfits. The most I’ve stayed with… Continue reading Getting up to speed on a new team

Category: Agile

How to build a mobile app

You’ve got an idea for a mobile app. Good for you. Unfortunately, so do lots of people. There are millions of apps on the app store that are never successful. Ideas are cheap; execution is key. This blog is about how you can go about executing a mobile app. This process won’t guarantee success but… Continue reading How to build a mobile app

Improving your testing skills

Today we are going over how to improve your thinking and software testing skills. The slide deck can be accessed here. Why do we bother testing? To get feedback. To help us answer the question, are we comfortable releasing this software? Read my mobile app test strategy here. There’s no manual testing. We don’t say manual… Continue reading Improving your testing skills

Where are they now?

Have you heard of the Agile Manifesto? It was published in 2001 when 17 blokes who work in tech came together to come up with a consolidated way of working. They came up with 12 principles which still hold up today. It’s worth a read. This blog is digging into the archives and asking the… Continue reading Where are they now?

Metrics and Quality

The superannuation and investment mobile app I’ve been working on over the last year has finally been released. It’s been on the app store for just over a month now* and this blog is about how we are using metrics to help keep tabs on the quality of our app. *You can download the app… Continue reading Metrics and Quality

Tails of Fail

Today I gave a talk at TiCCA (Testing in Context Conference). The talk topic was tails of fail – how I failed a quality coach role. It’s a story of how I tried out this quality coaching thing but I didn’t pass probation. You can access the slides here. I used Slido to manage the… Continue reading Tails of Fail

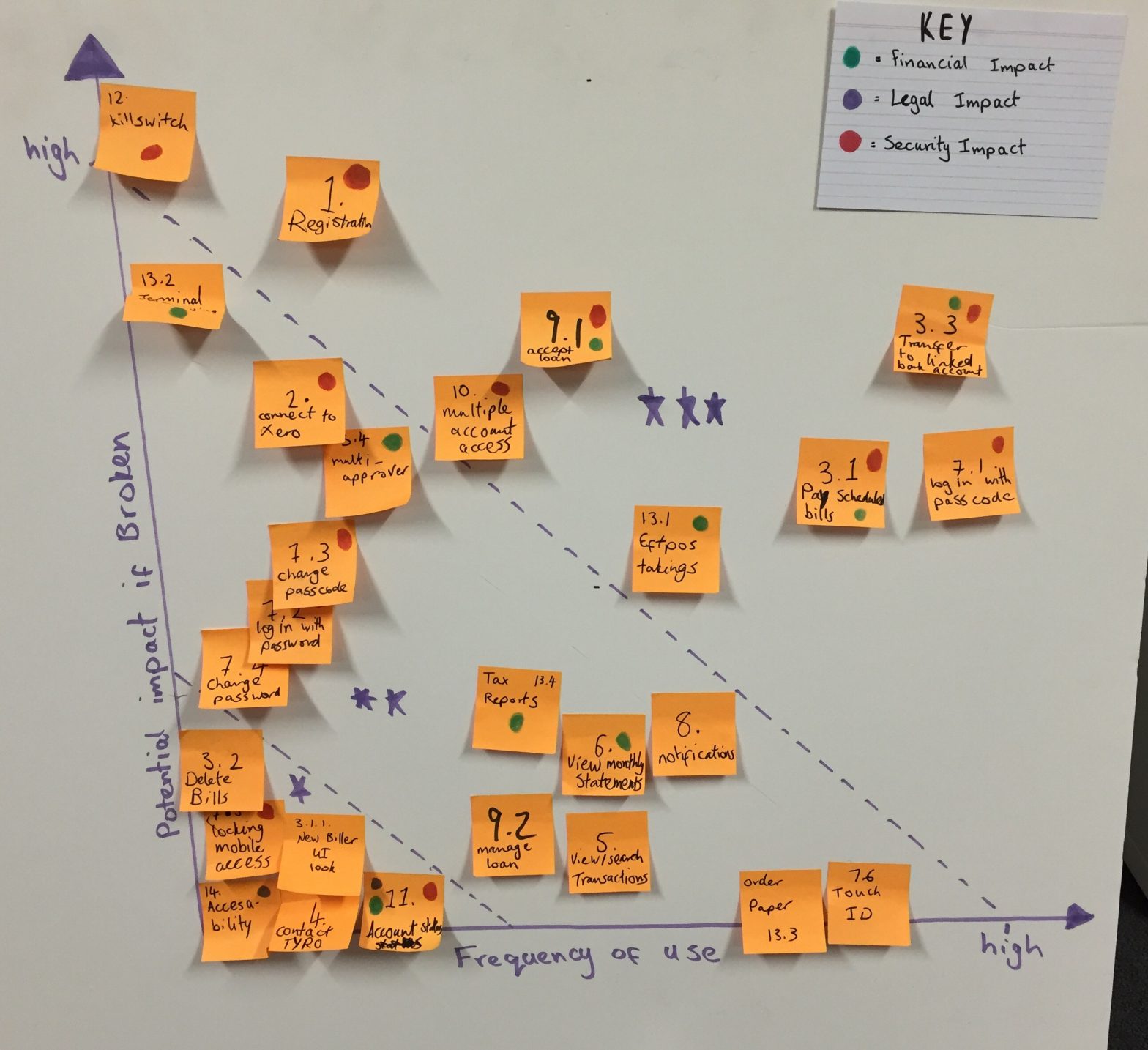

Visual Risk & UI Automation framework

Have you wanted to start with automation testing but not known where to begin? Or maybe you have hundreds or thousands of test cases in your current automation pipeline and you want to reduce the build times. Here I will walk you through one way you could consider slicing up this problem. Using examples from… Continue reading Visual Risk & UI Automation framework

Becoming a Quality coach – course overview

I had the pleasure of doing Anne-Marie’s Becoming a Quality Coach course today, which was organised by Test-Ed. If you are looking to transition to a quality coach role, it’s worth keeping this course on your radar. Anne-Marie is a renowned expert in software testing and quality engineering. I had the pleasure of working… Continue reading Becoming a Quality coach – course overview

What’s with the coach titles?

Agile, Quality, Life, Career … everyone wants to be some sort of coach these days. I don’t like the term coach. I feel like it downplays the hard work that goes into teaching. I learn and I teach what I learn. I love teaching so much I often do it for free. During uni I volunteered… Continue reading What’s with the coach titles?

Agile Australia 2018

On Tuesday the 19th of June I spoke at Agile Australia (access the recording here) on how to get more people involved with testing. You can access my slides: The bug hunt is on. I proposed 5 activities to help get more people involved with quality: Bug Bashes, Bug Bounties, Dog Fooding, Knowledge sharing practices… Continue reading Agile Australia 2018